Designing Trust in Conversational AI for Ambiguous Human Input

A conversational AI case study about uncertainty, interpretation, and responsible interaction with personal human input.

Human memory is subjective, incomplete, and often contradictory.

Yet conversational AI systems are increasingly used to interpret personal narratives, from journaling to therapy-adjacent tools.

Liquid Data explores how AI can engage with deeply personal memories without presenting its interpretations as truth.

The challenge wasn’t generating output. It was designing appropriate interpretation.

Design Challenge: How do you build a conversational AI that processes memory without claiming to understand it?

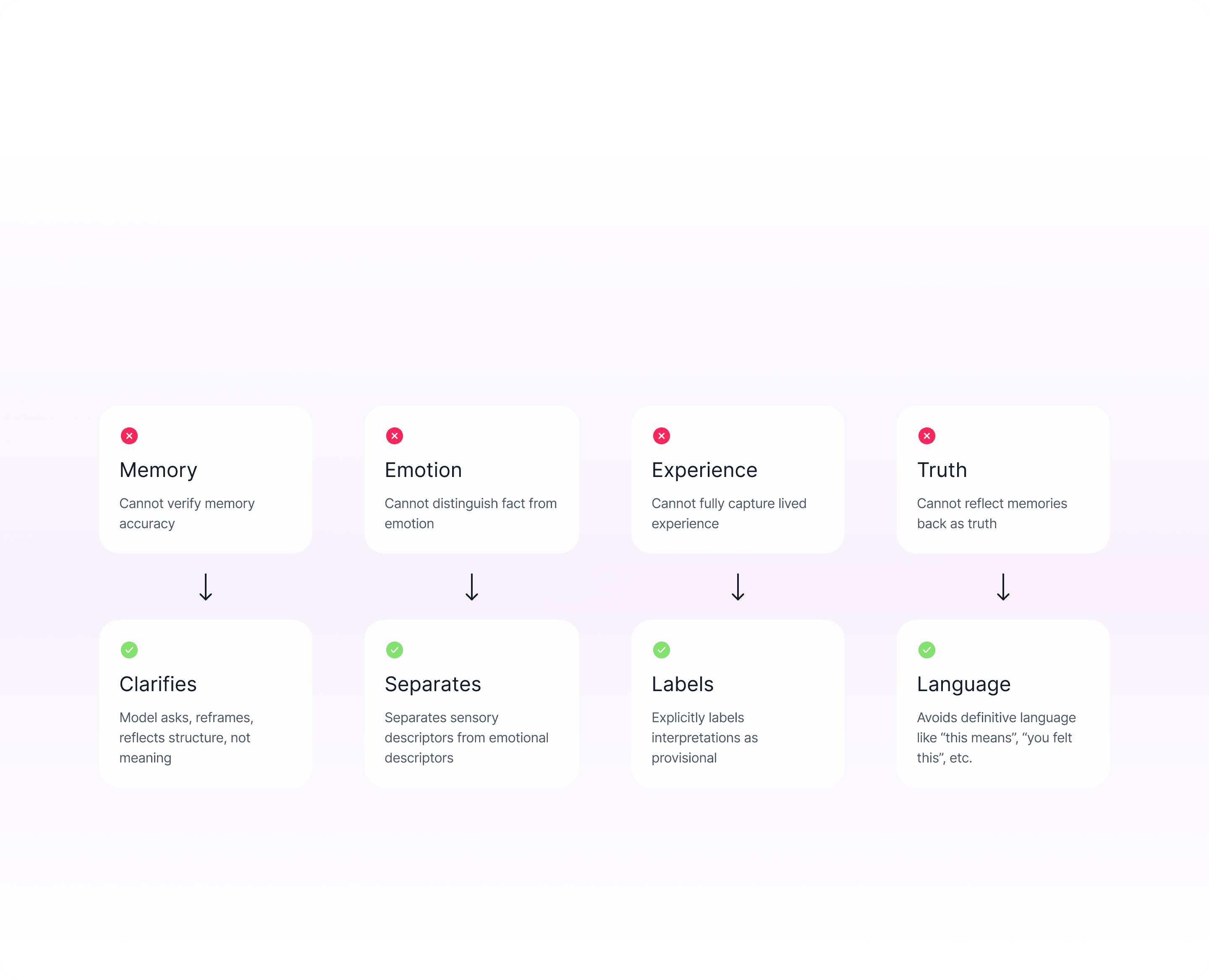

System Constraints

What Broke, What Changed

Transforming conversation from “confession” to “collaboration”

Making uncertainty visible

Trust improved when the system appeared less certain about its outputs

Ambiguity was designed intentionally

A conversational tone helped keep the model's authority in check

A transparent description of the process before onboarding familiarizes users with it and gives them agency over it

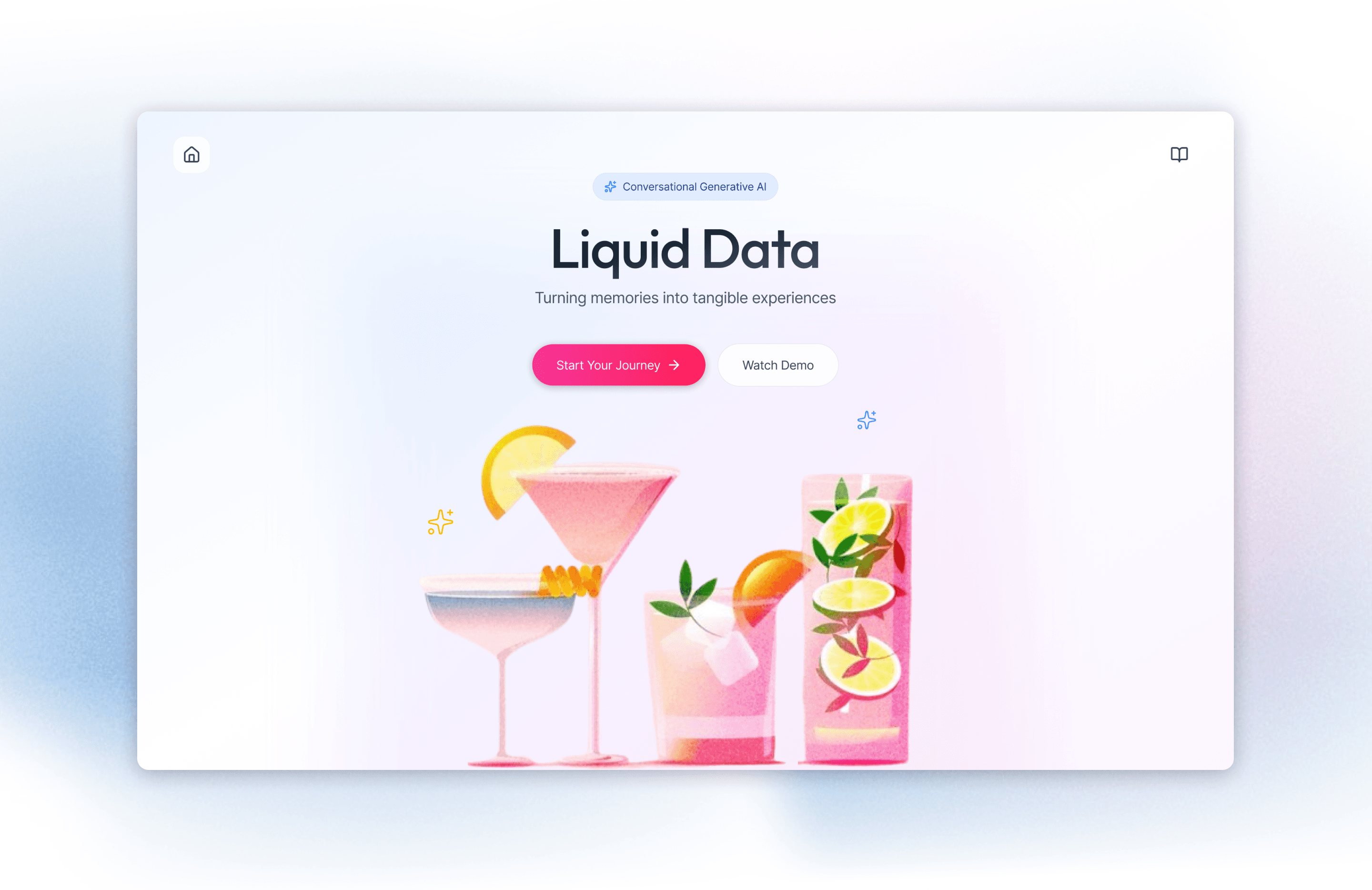

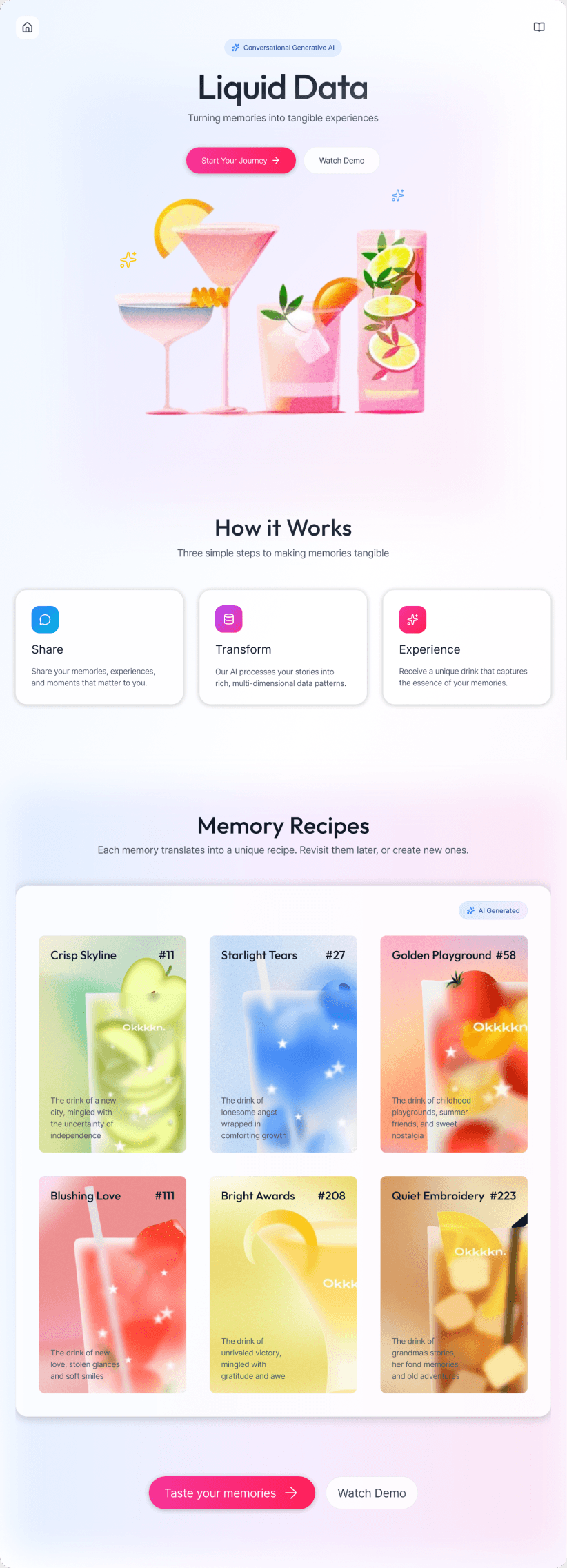

Descriptive, inviting, and warm landing page

Demo → makes users more comfortable with the system

The AI model used is shown upfront for transparency

Sample output is shown before onboarding for transparency → increasing trust

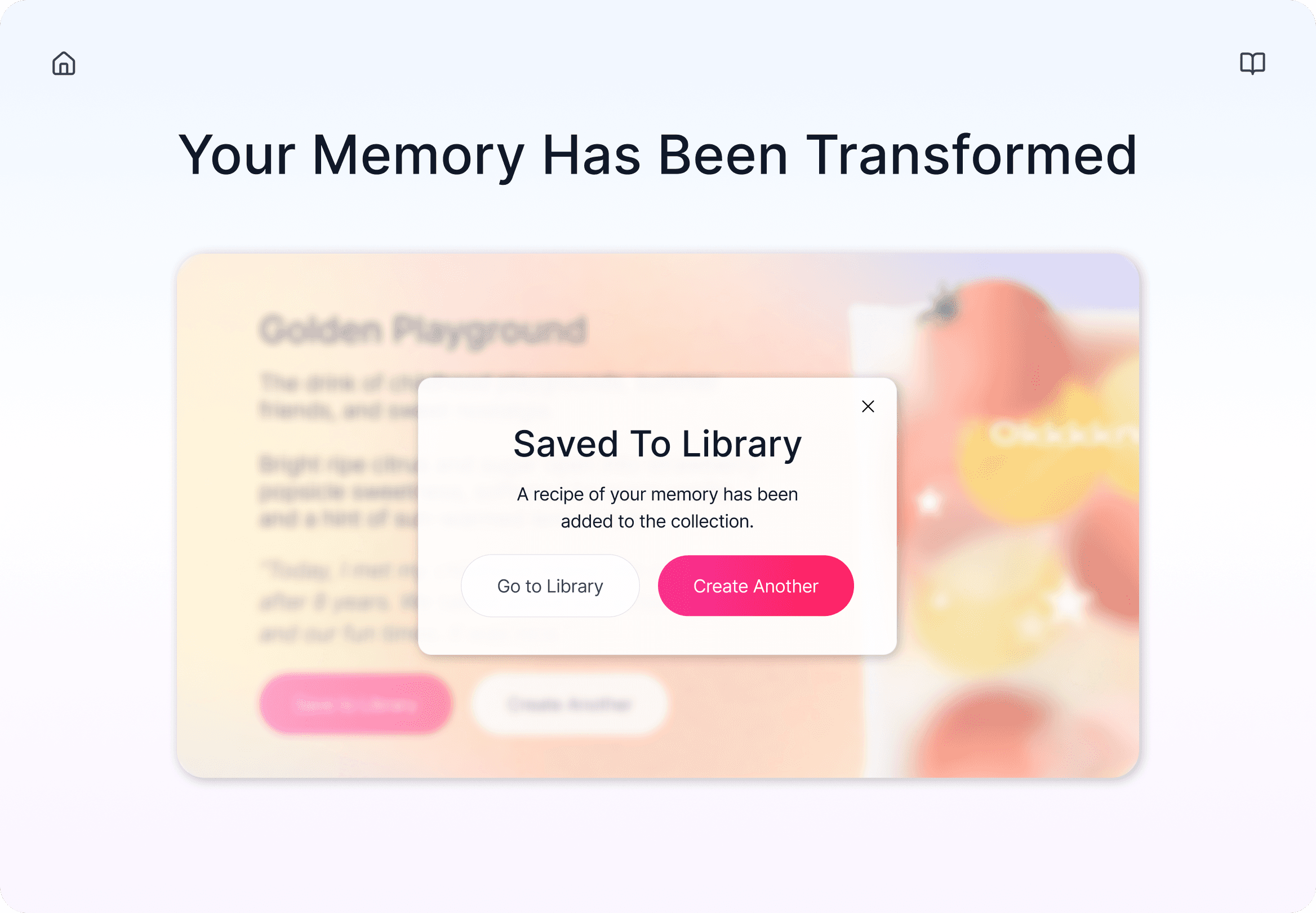

‘AI Generated’ tag to raise awareness and ensure nothing is hidden from users

Bold, to the point, clutter-free CTA

Soft boundaries in conversational tone to prevent the model from assuming therapeutic authority

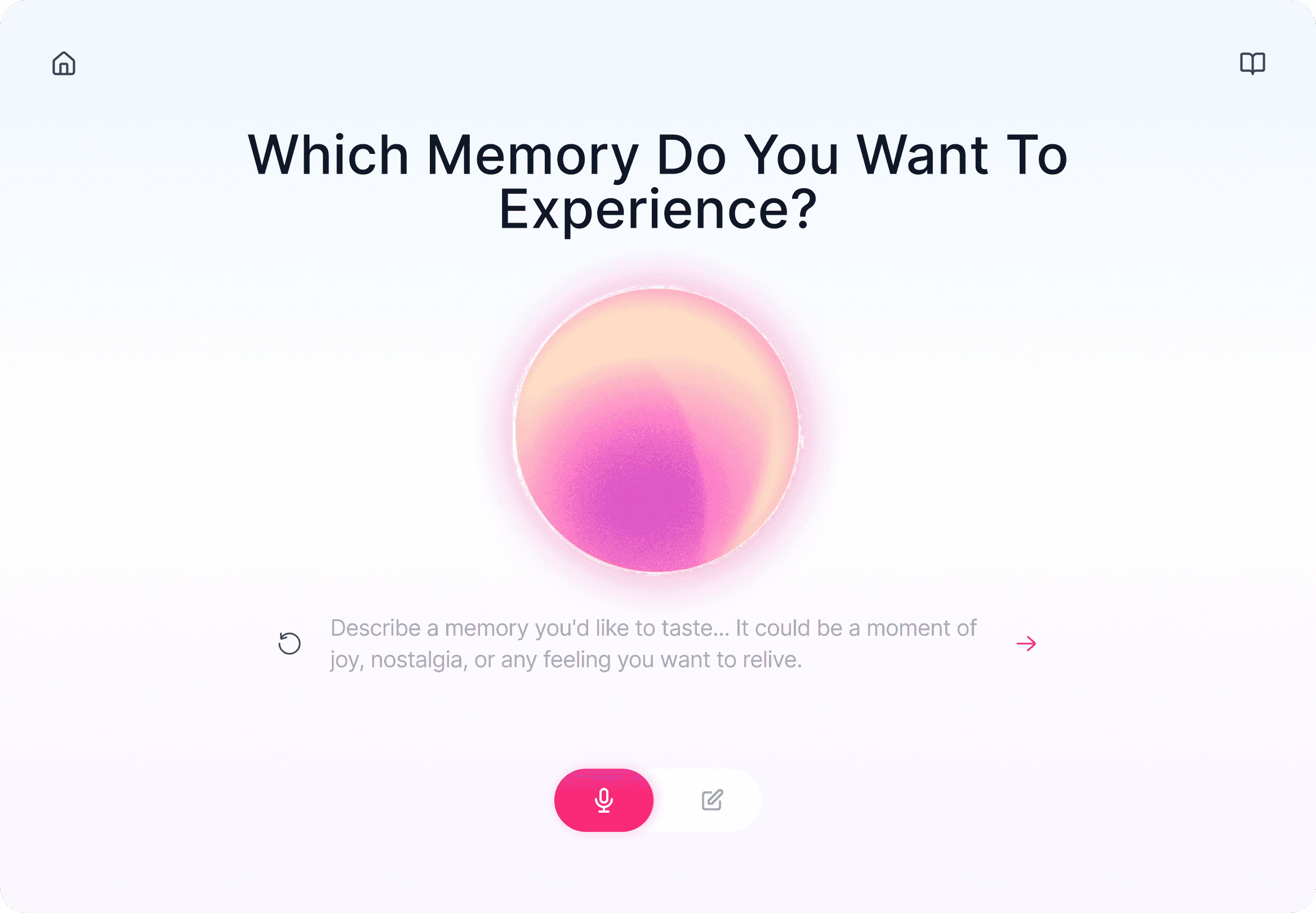

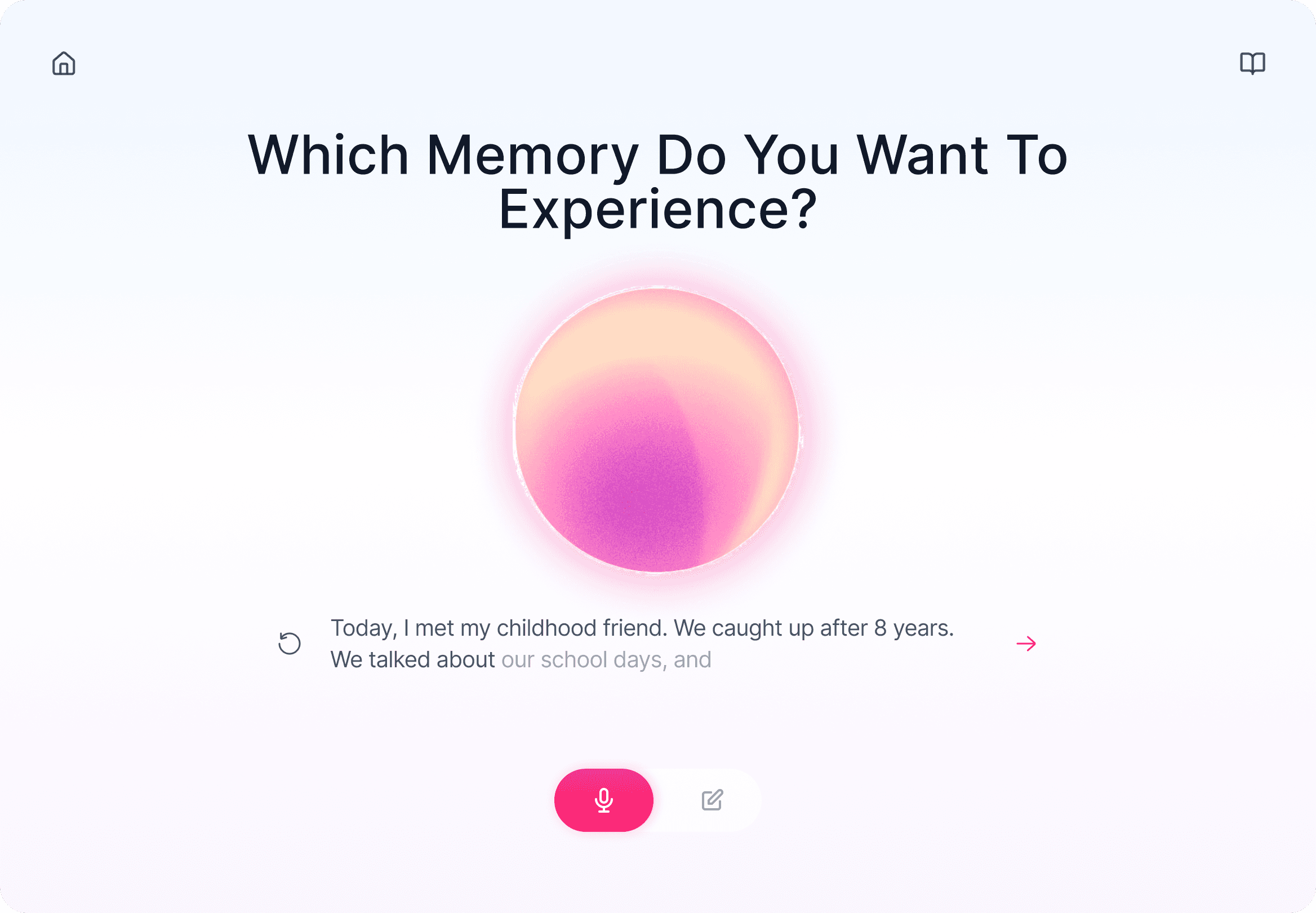

Non-automated input field → users decide when they want to proceed

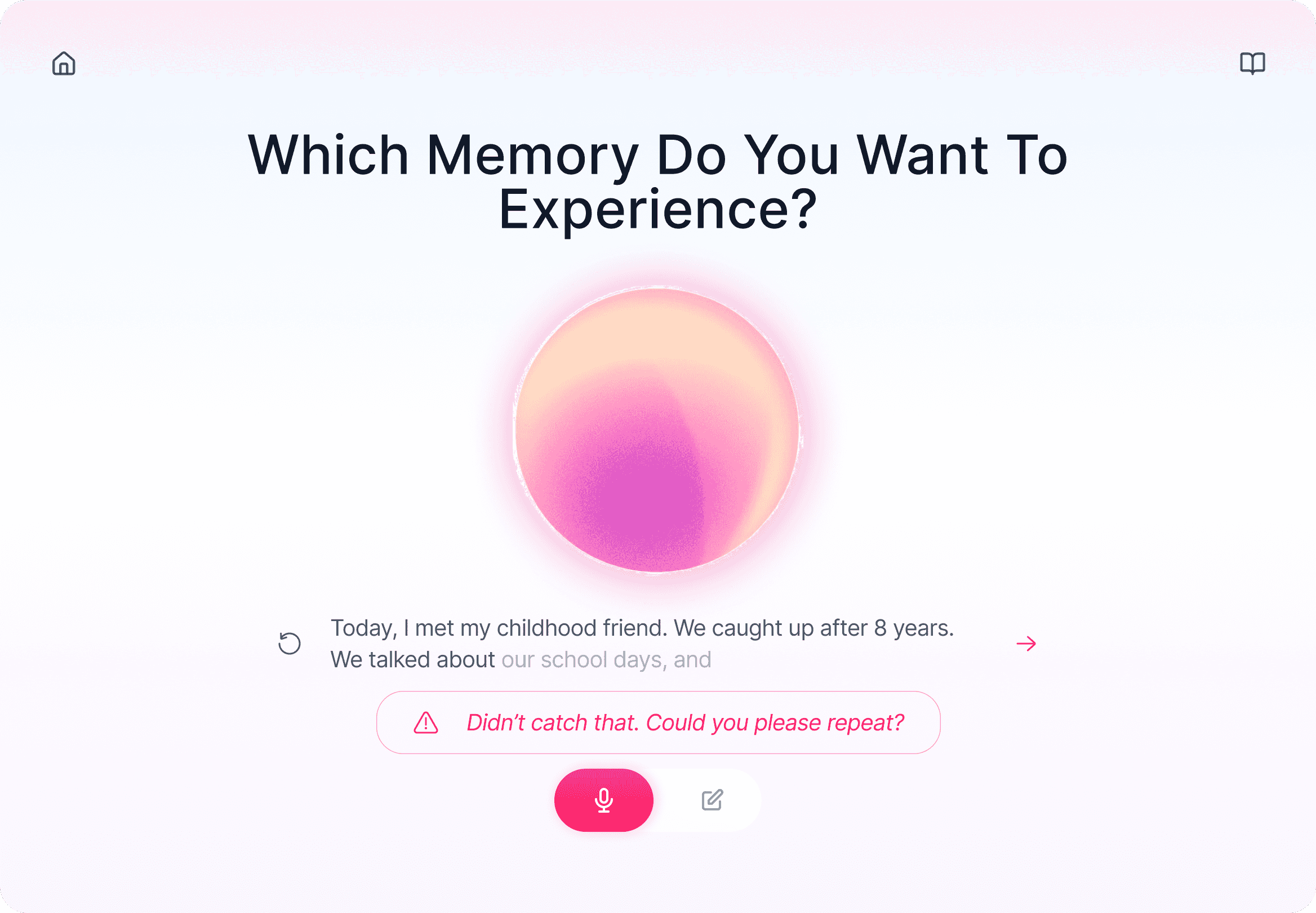

Soft error message that avoids interrupting the flow

Soft error message that sticks to the world-building

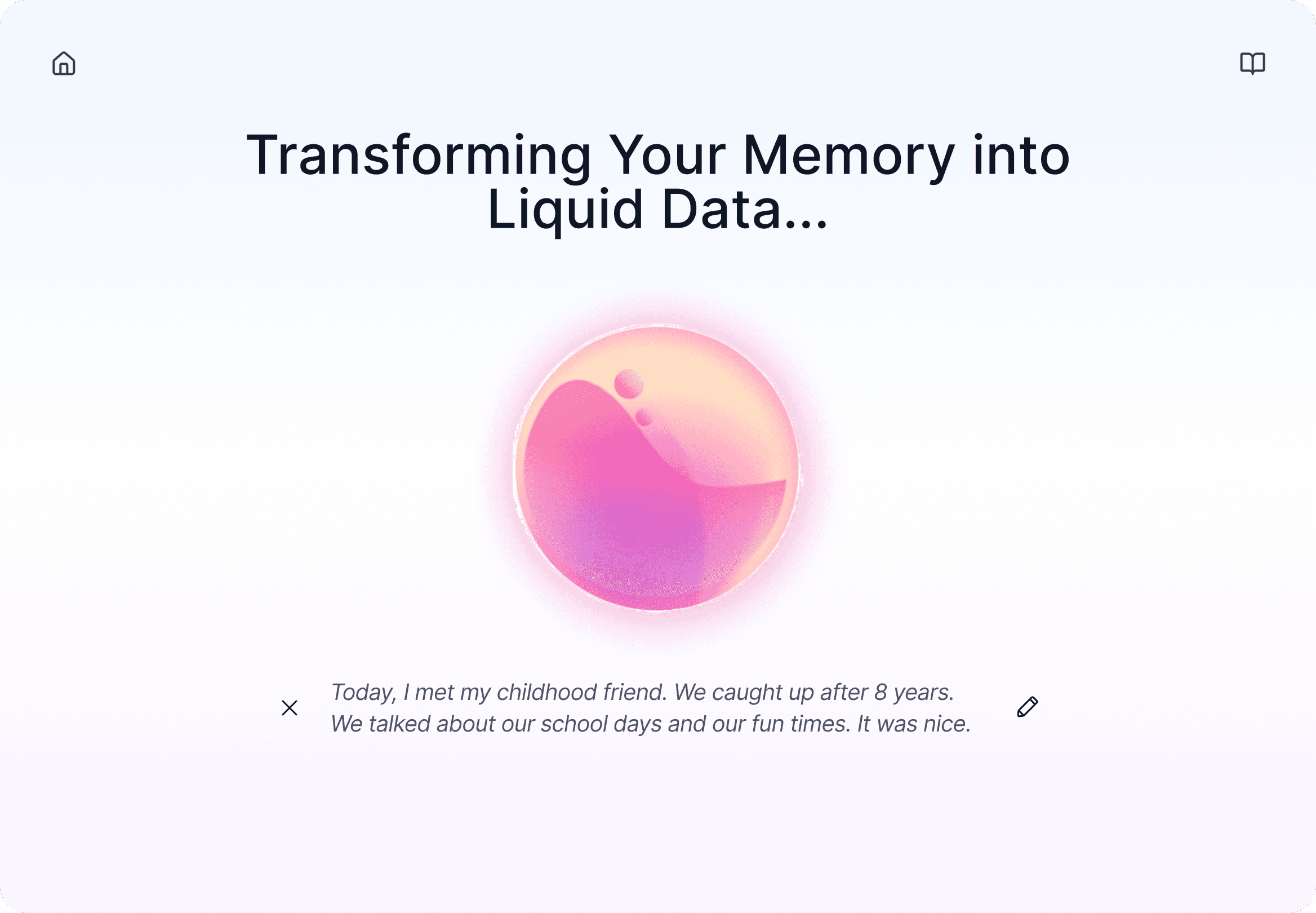

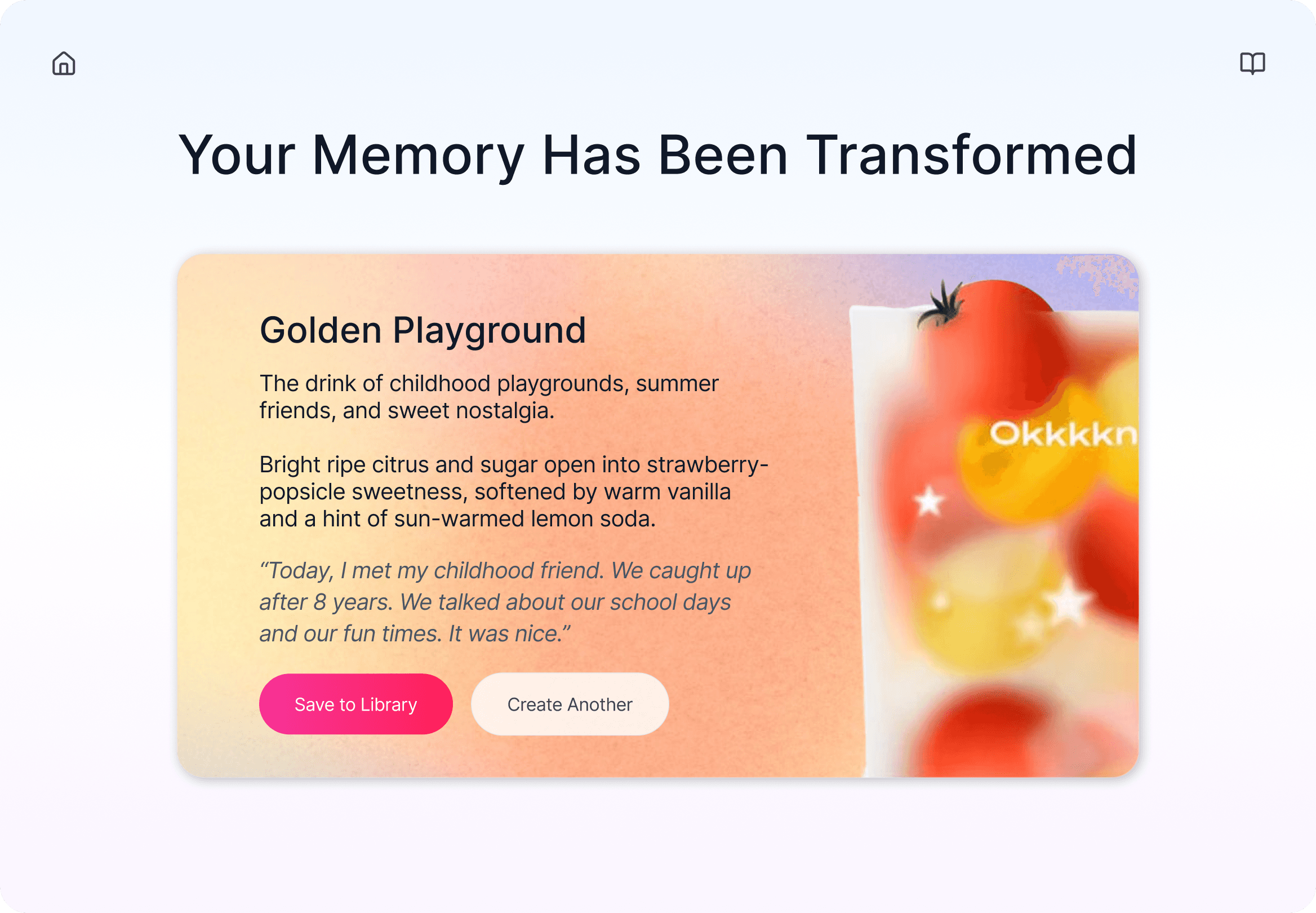

The liquid output is not an understanding of the memory, but a structuring of an abstract input

Gives users control over what data gets saved, for stronger agency and more trust

Non-diagnostic labeling to prevent emotional over-attachment to the AI

Option to edit the response or exit the process, for user control

Core Problem When Users Describe Memories + Interface Risks

Riddhi Kasar

© All rights reserved 2025